Social media companies face a legal reckoning this year as juries begin hearing the social media addiction lawsuits.

The long-awaited wave of cases will determine whether companies like Meta, YouTube, TikTok and their compatriots can face legal consequences for creating defective products.

The term "social media addiction lawsuits" refers to thousands of civil cases alleging that social media companies like Meta, YouTube and TikTok designed and released addictive products without adequate warnings, causing personal and financial injury.

The cases parallel the product liability lawsuits against tobacco companies, which eventually agreed to pay $206 billion for damage caused by addictive cigarettes.

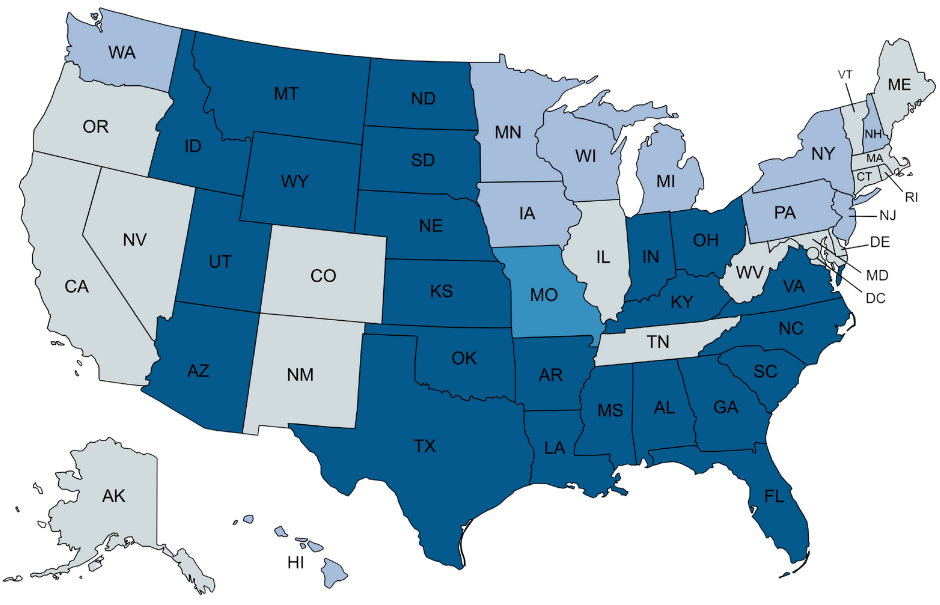

The strongest cases are bundled into two groups: cases filed in federal court and cases filed in California state court. The federal cases primarily represent school districts and states that claim they have footed the bill for young people’s social media addiction. The first of these cases will go to trial this summer.

The cases filed in California represent an estimated 1,600 individuals, families and school districts that claim social media addiction caused them personal injury.

The first of these cases — a 2023 suit filed on behalf of a young woman named KGM and several other plaintiffs — began trial proceedings on January 27.

KGM allegedly developed depression, anxiety and body-image issues after years of using YouTube, Instagram, TikTok (formerly Musical.ly) and Snapchat. She also became a victim of sextortion on Instagram, where a predator shared explicit photos of her as a minor.

The New York Post, which reviewed KGM’s complaint, describes her alleged experience with Meta following her victimization:

Predators met their victims on Instagram in nearly half (45%) of all the sextortion reports filed with the National Center for Missing and Exploited Children between August 2020 and August 2023.

Of the scammers who threatened to share explicit photos of minors, 60% threatened to do so on Instagram.

When Instagram launched new tools to address sextortion in October 2024 — long after KGM’s experience — the National Center on Sexual Exploitation (NCOSE) warned:

KGM’s mom allegedly used third-party parental control apps to keep her daughter from using social media. It didn’t work. Per the complaint, Meta, YouTube, TikTok and Snap “design their products in a manner that enables children to evade parental consent.”

Social media “has changed the way [my daughter’s] brain works,” KGM’s mom wrote in a filing reviewed by the Los Angeles Times, continuing:

Snap, the company behind Snapchat, and TikTok settled with KGM in January. Meta and YouTube are expected to proceed with the trial.

KGM’s case is the first of nine California bellwether cases, test cases used to gauge whether a novel legal theory will hold up in court.

The open question is whether KGM’s argument can circumvent Section 230 of the Communications Decency Act, which shields online platforms from liability for users’ posts.

Social media companies have thus far escaped product liability claims using Section 230. In a November motion to keep KGM’s case from going before a jury, Meta blamed the harm KGM experienced on content posted to Instagram, for which it could not be held responsible.

Judge Carolyn B. Kuhl, who oversees the state cases, ruled against the social media juggernaut, finding KGM had presented evidence indicating Instagram itself — not content posted to Instagram — caused her distress.

“Meta certainly may argue to the jury that KGM’s injuries were caused by content she viewed,” Judge Kuhl wrote. “But Plaintiff has presented evidence that features of Instagram that draw the user into compulsive viewing of content were a substantial factor in causing her harm.”

“The cause of KGM’s harms is a disputed factual question that must be resolved by the jury,” she concluded.

If KGM or any other bellwether plaintiff wins, Section 230 may no longer offer unconditional protection for social media companies that behave badly.

The social media addiction cases could send social media companies an expensive message about prioritizing child wellbeing. But parents shouldn’t rely on companies’ altruism (or grudging compliance with court orders) to protect their kids.

Social media is not a safe place for children. Parents should seriously consider keeping their children off it.

For more of the Daily Citizen’s reporting on the effects of social media on children, see the articles listed below.

Additional Articles and Resources

America Controls TikTok Now—But Is It Really Safe?

X’s ‘Grok’ Generates Pornographic Images of Real People on Demand

Australia Bans Kids Under 16 Years Old From Social Media

TikTok Dangerous for Minors — Leaked Docs Show Company Refuses to Protect Kids

Instagram’s Sextortion Safety Measures — Too Little, Too Late?

Key Takeaways From Zuckerberg’s Tell-All

Zuckerberg Implicated in Meta’s Failures to Protect Children

Instagram Content Restrictions Don’t Work, Tests Show

Surgeon General Recommends Warning on Social Media Platforms

Horrifying Instagram Investigation Indicts Modern Parenting

Survey Finds Teens Use Social Media More Than Four Hours Per Day — Here’s What Parents Can Do

Child Safety Advocates Push Congress to Pass the Kids Online Safety Act

Many Parents Still Fail to Monitor Their Kids’ Online Activity, Survey Shows

‘The Tech Exit’ Helps Families Ditch Addictive Tech — For Good

President Donald Trump, First Lady Sign ‘Take It Down’ Act

First Lady Supports Bill Targeting Deepfakes, Sextortion and Revenge Porn