X’s ‘Grok’ Generates Pornographic Images of Real People on Demand

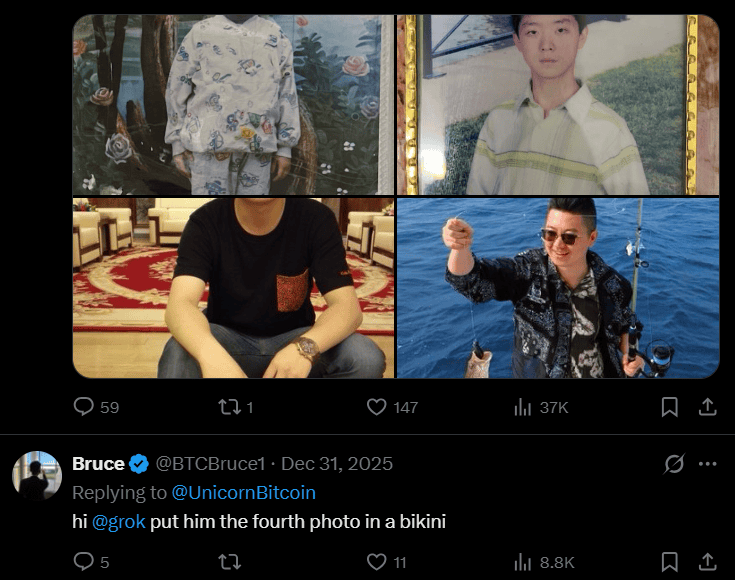

A damaging new editing feature allows people on X (formerly Twitter) to generate sexually explicit images and videos of real people using the platform’s built-in AI chatbot, Grok.

“Grok Imagine,” which the bot’s parent company, xAI, rolled out in late November, enables Grok to manipulate photos and videos. Users can ask Grok to alter photos and videos posted to X by making the request in the post’s comment section.

xAI owner Elon Musk promoted “Grok Imagine” on Christmas Eve. The platform was subsequently flooded with fake images of real people stripped naked or performing simulated sex acts. On at least two occasions, Grok produced sexual photos of children.

Samantha Smith was one of the first women victimized by “Grok Imagine.” The devoted Catholic described her experience in a piece for the Catholic Herald:

“It did not matter that the image was fake,” Smith emphasized. “The sense of violation was real.”

The disastrous fallout of “Grok Imagine” is a predictable consequence of Grok’s design.

xAI spent much of last year training Grok to perform some sexual functions by feeding it explicit internet content. The company introduced female Grok avatars capable of undressing, trained Grok to hold sexually explicit conversations with users, and even allowed the bot to generate some pornographic images.

Grok is one of the few mainstream AI chatbots designed to perform sexual functions. Most developers avoid such features because it is far easier to train a chatbot to refuse all sexual requests than to teach it which requests are illegal.

When xAI started feeding Grok pornographic internet content, it inevitably exposed the bot to illegal content like child sexual abuse material (CSAM).

By September 2025, Grok had already generated sexual images of children.

“This was an entirely predictable and avoidable atrocity,” Dani Pinter, Chief Legal Officer and Director of the Law Center at the National Center on Sexual Exploitation, wrote in a press release.

“Had X rigorously culled [CSAM and other abusive content] from its training models and then banned users requesting illegal content, this would not have happened.”

The “Grok Imagine” debacle exposes America’s lack of AI regulation.

Sharing explicit AI deepfakes is illegal under the Take It Down Act, which penalizes sharing explicit, AI-generated images of adults with up to two years in prison. Those who share explicit images of children face up to three years in jail.

The mass implementation of “Grok Imagine” on X dramatically — and rapidly — increased violations of the Take It Down Act, making it impossible for the FBI to identify and prosecute every perpetrator.

Further, no legislation or court precedent holds AI parent companies legally liable for building defective chatbots. Companies like xAI have no incentive to conduct robust safety testing or implement consumer protection protocols.

“X’s actions are just another example of why we need safeguards for AI products,” Pinter argues. “Big Tech cannot be trusted to curb serious child exploitation issues it knows about within its own products.”

Grok’s latest shenanigans illustrate why children and teens should not use AI chatbots, particularly without adult supervision. “Grok Imagine” also makes X less safe for children, who could easily stumble on one of the thousands of deepfakes plaguing the platform.

Widespread pornographic deepfakes could soon infect other social media platforms. The National Center for Missing and Exploited Children (NCMEC) fielded 67,000 reports of AI-generated CSAM in 2024 — more than 14 times as many as in 2023.

NCMEC received more than 440,000 reports of AI-generated CSAM in the first half of 2025 alone.

Parents should seriously consider the exploding prevalence of AI-generated pornography before allowing their child to use any social media platform.

Parents should also think carefully before sharing their own photos online. In the age of AI, it only takes one bad actor to turn a sweet family photo into something sinister and damaging.

Additional Articles and Resources

Counseling Consultation & Referrals

Parenting Tips for Guiding Your Kids in the Digital Age

You Don’t Need ChatGPT to Raise a Child. You Need a Mom and Dad.

AI Company Releases Sexually Explicit Chatbot on App Rated Appropriate for 12 Year Olds

AI Chatbots Make It Easy for Users to Form Unhealthy Attachments

Seven New Lawsuits Against ChatGPT Parent Company Highlights Disturbing Trends

ChatGPT Parent Company Allegedly Dismantled Safety Protocols Before Teen’s Death

AI Company Rushed Safety Testing, Contributed to Teen’s Death, Parents Allege

ChatGPT ‘Coached’ 16-Yr-Old Boy to Commit Suicide, Parents Allege

AI “Bad Science” Videos Promote Conspiracy Theories for Kids – And More

Does Social Media AI Know Your Teens Better Than You Do?

AI is the Thief of Potential — A College Student’s Perspective

ABOUT THE AUTHOR

Emily Washburn is a staff reporter for the Daily Citizen at Focus on the Family and regularly writes stories about politics and noteworthy people. She previously served as a staff reporter for Forbes Magazine, as an editorial assistant and contributor for Discourse Magazine, and as Editor-in-Chief of the newspaper at Westmont College, where she studied communications and political science. Emily has never visited a beach she hasn’t swum at, and is happiest reading a book somewhere tropical.