Elliston Berry, a teenage victim of explicit AI deepfakes, has helped develop a free program to teach parents and schools how to protect their kids from AI-driven sexual abuse.

A classmate used AI to make explicit, nude images of Berry when she was just 14 years old. The adults at her high school were at a loss over how to protect her.

“One of the situations that we ran into [in my case] was lack of awareness and lack of education,” Berry, now 16, told CNN this week. “[The leaders of my school] were more confused than we were, so they weren’t able to offer any comfort [or] any protection to us.”

“That’s why this curriculum is so important,” she emphasized.

Adaptive Security built the free resource in partnership with Berry and Pathos Consulting Group, a company educating children about AI deepfakes and staying safe from online abuse.

“We partnered with Adaptive to build a series of courses together because we believe now is a critical time to protect our youth against these new AI threats,” Evan Harris, the founder of Pathos and a leading expert in protecting children from AI-driven sexual abuse, explained in a video launching the curricula.

The courses explain:

- What deepfakes are and why they can be harmful.

- When messing around with AI becomes AI-driven sexual abuse.

- How to broach discussions about online sexual exploitation with students and parents.

Schools can generate a personalized version of the curriculum by filling out the form on Adaptive Security’s website.

Adaptive’s free lessons also explain the rights of victims under the Take It Down Act, which President Donald Trump signed into law in May 2025. The law penalizes generating explicit, deepfake images of minors with up to three years in prison. It also requires social media companies to scrub nonconsensual intimate images from their platforms within 48 hours of a victim’s request.

Berry, who helped First Lady Melania Trump whip up support for the Take It Down Act, waited nine months for her deepfakes to be taken off the internet.

The Take It Down Act gives victims of AI deepfakes an opportunity to seek justice. Berry, Harris and Adaptive Security CEO Brian Long hope their program will discourage the generation of explicit deepfakes altogether.

“It’s not just for the potential victims, but also for the potential perpetrators of these types of crimes,” Long told CNN.

Parents and educators should not dismiss AI-driven sexual abuse as a rare occurrence.

The National Center for Missing and Exploited Children received more than 440,000 reports of AI-generated child sexual abuse material in the first half of 2025 — more than six times as many as in all of 2024.

In March 2025, one in every eight of the 1,200 13- to 20-year-olds surveyed by the child safety nonprofit Thorn reported knowing a victim of explicit, AI-generated deepfakes.

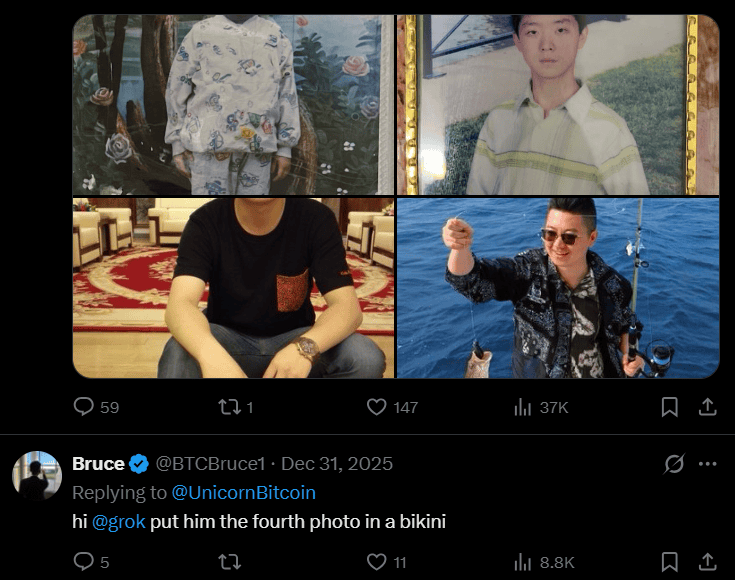

That number is likely higher now — if only because X (formerly Twitter) integrated an AI editing feature in November allowing users to generate explicit images of real people in the comment section.

xAI, the company behind X’s built-in AI chatbot, limited the feature last week after the platform was flooded with illegal, AI-generated images.

The Daily Citizen thanks Elliston Berry and other victims of AI-driven sexual abuse for using their experiences to help parents keep their kids safe online.
